AI-powered tool for designers

The project was an independent sub-project of the Big Fun Research project at Volvo Trucks, and my project group and I worked on it during spring 2023. I was the lead of our sub-project and in charge of executive decisions, communication and documentation.

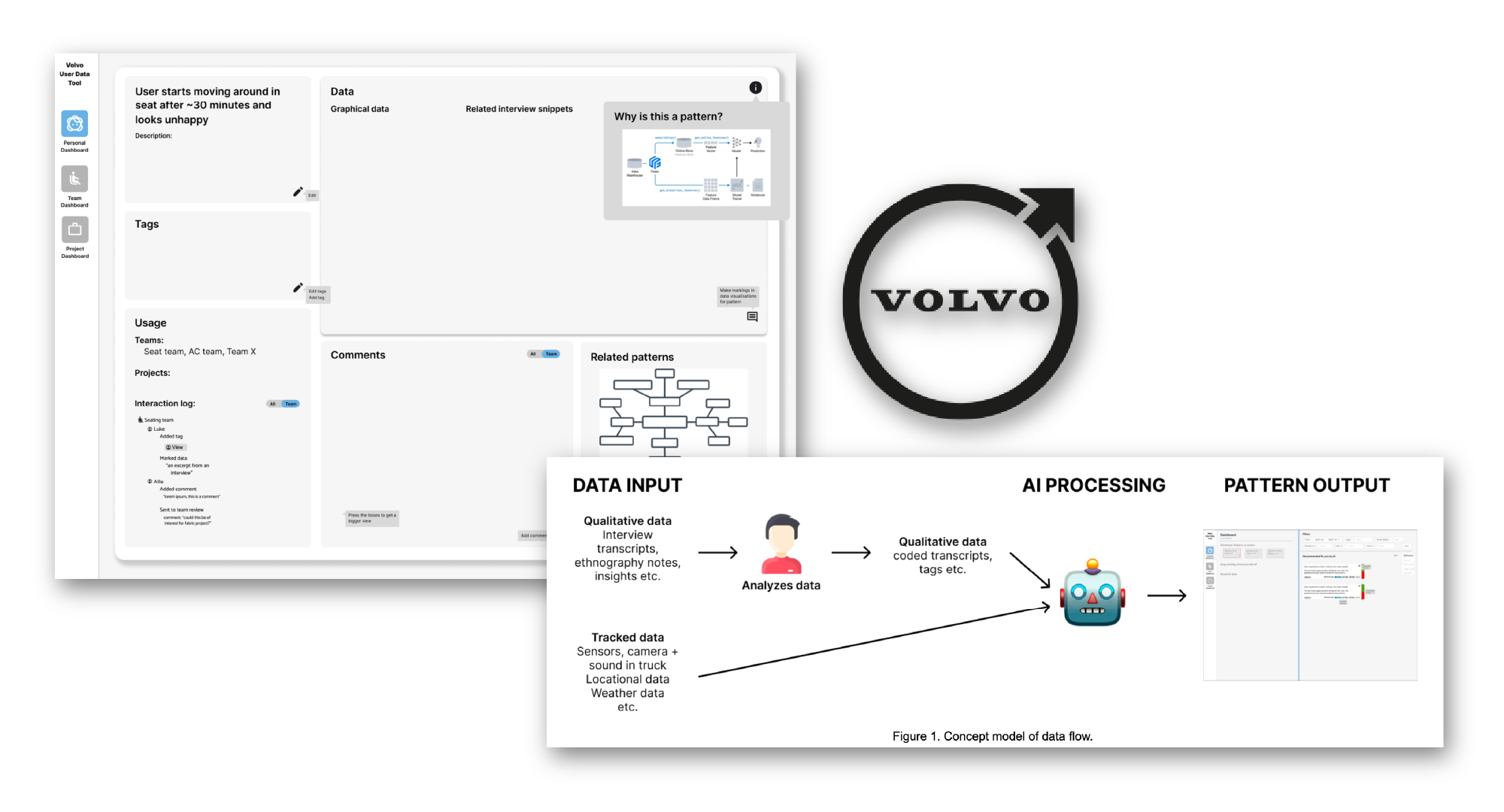

The goal was to use design as a research tool and extend knowledge of how AI can empower designers in their design work. We prototyped a tool for designers based on the premise that AI can support persona creation by identifying behavioural patterns.

Throughout the process, we worked with different mental frameworks to focus on aspects like trust, currency, ethics, autonomy and privacy. This deepened my understanding of the complexity of designing AI; for example, the level of trust placed in the AI and the autonomy given to it both need to be designed intentionally.

In managing the sub-project, I planned and scheduled meetings with the project group and with peers who gave continuous feedback on the project.

I was also in charge of our project documentation, which is crucial in research through design because it makes it possible to follow the trail of knowledge creation.

I took responsibility for designing our process. I enjoy this responsibility because it lets me make sure that no stone is left unturned and that everything is done purposefully.

For this project, our process focused on design work, evaluations and collective reflection sessions.

Because the project description was broad, we had to decide how to narrow it down as the project progressed. We did this through discussions in the project group, but as project lead it was my responsibility to make sure that executive decisions were well grounded and that we stayed on the path we had chosen.

To understand the context we were designing for and to learn what was already known in the area, I carried out two activities:

I participated in a facilitated interview with Volvo Trucks representatives to learn about the project's purpose and how designers work today.

I studied literature on AI in the workplace, AI for design work and AI in commercial mobility.

Putting ideas down on paper as models and sketches was my method of learning. Doing so helped me identify problems and perspectives that I had not previously considered.

I designed models of data flows, designers' work journeys and more. I also sketched interfaces and service blueprints of human-AI interaction, which ultimately taught me how AI and designers can work together.

Design alone does not lead to new knowledge; it does so only when coupled with reflection on the design. I therefore facilitated reflection sessions throughout the project, where the focus was on having every member of the project group share their thoughts on both the details of our design and the bigger picture.

The project resulted in 10 points of knowledge on how an AI tool can be designed to help designers identify and trust AI-detected behavioural patterns. We formulated these points as design suggestions and implemented them in an interface for such a tool.

Persona creation involves several steps (data collection, analysis, creation and visualisation), so for an AI to create personas, it would need to go through all of these steps.

Having AI work through many consecutive steps without human involvement reduces transparency into how it works, what data it uses and how that data is interpreted. This could make mistakes harder to detect.

Designing narrower, single-purpose AI gives humans more control over the outputs.

Much research concerns how to design AI so that people trust it and its outputs. During the project we discussed this topic at length and found that distrust in AI is important too, and perhaps not something we should try to design away entirely.

We discussed intentionally designing for a lower level of trust in the AI, because if people place too much trust in it, it might end up being given responsibility for making decisions.

Taken further, the chain looks like this: trusting AI → handing over responsibility for decisions → AI making decisions. If that is not a scenario we want, perhaps we should not design AI in a way that makes people trust it.

Initially, we were intrigued by the idea of using real-time data to create the behavioural patterns, so that they would always be up to date and constantly adapt to changes in user behaviour.

However, we learned that time is a very important aspect of behavioural patterns in design. If real-time data always flows straight into the dataset underlying the behavioural patterns, several challenges arise:

- It could be hard to reach consensus in the research if the patterns are constantly changing.

- Over a longer period, it would become harder to distinguish new patterns if all data is lumped together, and new patterns might take longer to break away from pre-existing ones.

- From a business perspective, it would also be challenging to use behavioural patterns to motivate design decisions if the patterns keep changing over time.

We concluded that time would be an interesting direction for future research in this area.